XAI and Adversarial ML

Objective: We aim to make deep neural networks robust against adversarial and attributional attacks, and we investigate the connection between robustness and explainability. We also study adversarial attacks on deep reinforcement learning agents and graph neural networks.

Related Publications:

-

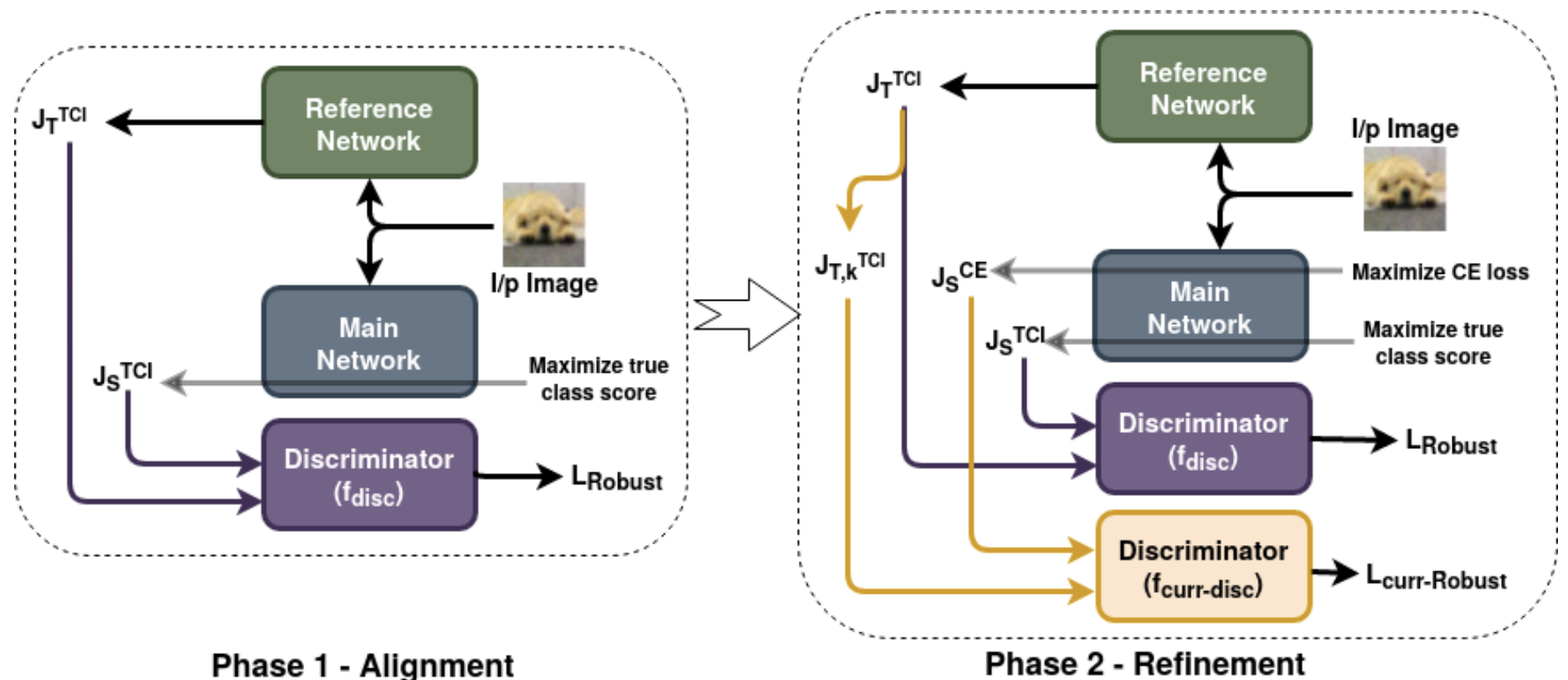

Get Fooled for the Right Reason: Improving Adversarial Robustness through a Teacher-guided Curriculum Learning Approach (NeurIPS 2021)

-

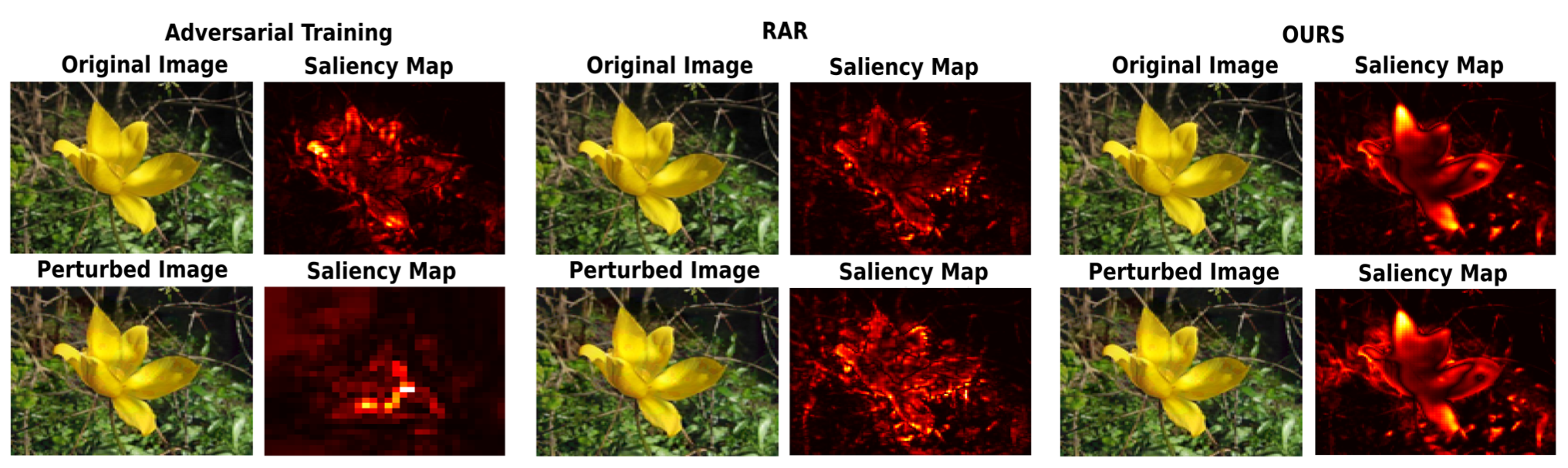

Enhanced Regularizers for Attributional Robustness (AAAI 2022)

-

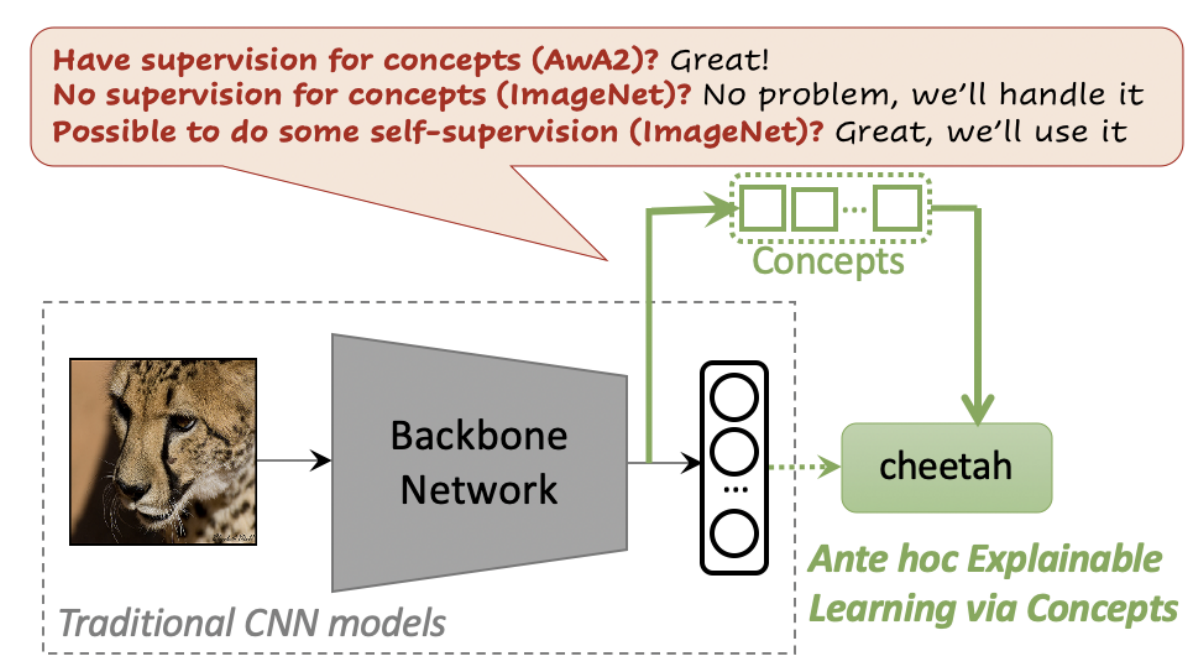

A Framework for Learning Ante-hoc Explainable Models via Concepts (CVPR 2022)

- Leveraging Test-Time Consensus Prediction for Robustness against Unseen Noise (WACV 2022)

- Reward Delay Attacks on Deep Reinforcement Learning (GameSec 2022)

- Empowering a Robust Model with Stable and Object-Aligned Explanations (ECCV Workshop 2022)